Let $x, y$ be nonzero points in $\mathbb{R}^n$. If we denote by $x^*$ the reflection point of $x$ with respect to the unit ball, i.e.

$$x^* = \frac{x}{|x|^2},$$

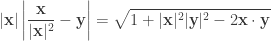

we then have the following well-known identity

$$|x|\,|x^* - y| = |y|\,|y^* - x|.$$

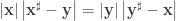

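The proof of the above identity comes from the fact that

$$|x|\,|x^* - y| = \sqrt{|x|^2 |y|^2 - 2 x \cdot y + 1} = |y|\,|y^* - x|.$$

Indeed, by squaring both sides of

$$|x|\,|x^* - y| = \sqrt{|x|^2 |y|^2 - 2 x \cdot y + 1}$$

we arrive at

$$|x|^2 \left( |x^*|^2 - 2 x^* \cdot y + |y|^2 \right) = |x|^2 |y|^2 - 2 x \cdot y + 1,$$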

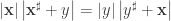

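which is obviously true since $|x^*| = 1/|x|$ and $|x|^2 x^* = x$. Similarly, the last identity also holds. If we replace $y$ by $y^*$ we also have

$$|x|\,|x^* - y^*| = |y^*|\,|y - x|, \quad \text{that is,} \quad |x^* - y^*| = \frac{|x - y|}{|x|\,|y|}.$$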

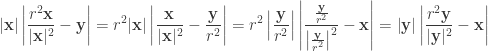

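Generally, if we consider the reflection point of $x$ over a ball $B_r(0)$, i.e.

$$x^* = \frac{r^2 x}{|x|^2},$$

we still have the fact

$$|x|\,|x^* - y| = |y|\,|y^* - x|.$$

Indeed, one gets

$$|x|^2 |x^* - y|^2 = r^4 - 2 r^2 x \cdot y + |x|^2 |y|^2.$$

Similarly,

$$|y|^2 |y^* - x|^2 = r^4 - 2 r^2 x \cdot y + |x|^2 |y|^2.$$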

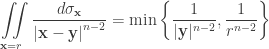
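As a quick sanity check, the identity $|x|\,|x^* - y| = |y|\,|y^* - x|$ for the reflection $x^* = r^2 x/|x|^2$ can be tested numerically. The following is a minimal sketch in plain Python; the helper names `reflect`, `norm`, `sub` and `identity_gap` are chosen here for illustration only, and the sampling ranges are arbitrary.

```python
import math
import random


def reflect(x, r=1.0):
    """Reflect x over the ball B_r(0): x* = r^2 x / |x|^2 (x must be nonzero)."""
    s = sum(c * c for c in x)
    return [r * r * c / s for c in x]


def norm(v):
    """Euclidean norm of a vector given as a list of floats."""
    return math.sqrt(sum(c * c for c in v))


def sub(a, b):
    """Componentwise difference a - b."""
    return [p - q for p, q in zip(a, b)]


def identity_gap(x, y, r=1.0):
    """Return |x||x* - y| - |y||y* - x|, which should vanish up to rounding."""
    lhs = norm(x) * norm(sub(reflect(x, r), y))
    rhs = norm(y) * norm(sub(reflect(y, r), x))
    return lhs - rhs


# Try random points in random dimensions and radii; record the largest gap.
rng = random.Random(0)
worst = 0.0
for _ in range(1000):
    n = rng.randint(2, 6)
    r = rng.uniform(0.5, 3.0)
    x = [rng.uniform(-2.0, 2.0) for _ in range(n)]
    y = [rng.uniform(-2.0, 2.0) for _ in range(n)]
    worst = max(worst, abs(identity_gap(x, y, r)))

print("largest deviation:", worst)
```

The reported deviation should be of the order of floating-point rounding error. Taking `r=1.0` recovers the unit ball case.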

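Such an identity is very useful. For example, in $\mathbb{R}^n$ ($n \geqslant 3$) the following holds

$$\int_{|x| = r} \frac{d\sigma_x}{|x - y|^{n-2}} = |\mathbb{S}^{n-1}|\, r^{n-1} \min\left\{ r^{2-n}, |y|^{2-n} \right\},$$

where $|\mathbb{S}^{n-1}|$ denotes the surface area of the unit sphere in $\mathbb{R}^n$.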

This type of formula has been considered before when $n = 3$. For the general case, Lieb and Loss introduced another method in their book published by the AMS in 2001. Here we introduce a completely new proof. At first, if $|y| > r$, by the potential theory (the mean value property applied to the harmonic function $x \mapsto |x - y|^{2-n}$), one easily gets

$$\int_{|x| = r} \frac{d\sigma_x}{|x - y|^{n-2}} = |\mathbb{S}^{n-1}|\, r^{n-1} |y|^{2-n}.$$

If $0 < |y| < r$, one needs to make use of the reflection point $y^*$ of $y$ over $B_r(0)$ and the above identity to go back to the first case: since $x^* = x$ whenever $|x| = r$, the identity reads $r\,|x - y| = |y|\,|x - y^*|$. The point here is $|y^*| = r^2/|y| > r$. The integral is obviously continuous as a function of $y$, which covers the remaining cases $y = 0$ and $|y| = r$. The above argument is due to professor X.X.W.
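The value of the sphere integral can also be checked numerically. The sketch below assumes the closed form $|\mathbb{S}^{n-1}|\, r^{n-1} \min\{r^{2-n}, |y|^{2-n}\}$ and compares it with a midpoint-rule quadrature in the polar angle $\theta$ between $x$ and $y$, using $|x - y|^2 = r^2 + |y|^2 - 2 r |y| \cos\theta$ and $d\sigma_x = |\mathbb{S}^{n-2}|\, r^{n-1} \sin^{n-2}\theta \, d\theta$ after integrating out the remaining angles. The function names and the sample parameters are illustrative choices, not part of the original argument.

```python
import math


def sphere_area(n):
    """Surface area of the unit sphere S^{n-1} in R^n."""
    return 2.0 * math.pi ** (n / 2) / math.gamma(n / 2)


def newton_integral(n, r, rho, steps=20000):
    """Approximate the integral of |x - y|^(2-n) over |x| = r, where |y| = rho,
    by the midpoint rule in the polar angle between x and y (rho != r)."""
    h = math.pi / steps
    total = 0.0
    for k in range(steps):
        t = (k + 0.5) * h
        dist2 = r * r + rho * rho - 2.0 * r * rho * math.cos(t)
        total += math.sin(t) ** (n - 2) * dist2 ** ((2 - n) / 2)
    return sphere_area(n - 1) * r ** (n - 1) * total * h


def closed_form(n, r, rho):
    """Assumed closed form |S^{n-1}| r^(n-1) min(r^(2-n), rho^(2-n)), rho > 0."""
    return sphere_area(n) * r ** (n - 1) * min(r ** (2 - n), rho ** (2 - n))


# Compare quadrature and closed form for y inside and outside the sphere.
for n, r, rho in [(3, 1.0, 0.5), (3, 1.0, 2.0), (5, 1.5, 0.7), (4, 2.0, 3.0)]:
    approx = newton_integral(n, r, rho)
    exact = closed_form(n, r, rho)
    print(n, r, rho, abs(approx / exact - 1.0))
```

The printed relative errors should be small for both $|y| < r$ and $|y| > r$. The quadrature is not meant for $|y| = r$, where the integrand is singular at $\theta = 0$.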

is a function and

is a

-form, then

is again a vector field on

. To prove this, we simply apply the previous identity with

replaced by

to get the desired result.