A root-finding algorithm is a numerical method, or algorithm, for finding a value $x$ such that $f(x) = 0$, for a given function $f$. Such an $x$ is called a root of the function $f$.

Suppose $f$ is a continuous function defined on the interval $[a, b]$, with $f(a)$ and $f(b)$ of opposite sign. By the Intermediate Value Theorem, there exists a number $p$ in $(a, b)$ with $f(p) = 0$. Although the procedure will work when there is more than one root in the interval $(a, b)$, we assume for simplicity that the root in this interval is unique. The Bisection method calls for a repeated halving of subintervals of $[a, b]$ and, at each step, locating the half containing $p$.

To begin, set $a_1 = a$ and $b_1 = b$, and let $p_1$ be the midpoint of $[a, b]$; that is,

$$p_1 = a_1 + \frac{b_1 - a_1}{2} = \frac{a_1 + b_1}{2}.$$

If $f(p_1) = 0$, then $p = p_1$, and we are done. If $f(p_1) \neq 0$, then $f(p_1)$ has the same sign as either $f(a_1)$ or $f(b_1)$. When $f(p_1)$ and $f(a_1)$ have the same sign, $p \in (p_1, b_1)$, and we set $a_2 = p_1$ and $b_2 = b_1$. When $f(p_1)$ and $f(a_1)$ have opposite signs, $p \in (a_1, p_1)$, and we set $a_2 = a_1$ and $b_2 = p_1$. We then reapply the process to the interval $[a_2, b_2]$.
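The halving procedure can be sketched in a few lines of Python. This is a minimal illustration rather than a definitive implementation: the function name `bisection`, the tolerance `tol`, the iteration cap `max_iter`, and the test function $x^3 + 4x^2 - 10$ on $[1, 2]$ are all assumptions chosen for the example.

```python
def bisection(f, a, b, tol=1e-10, max_iter=200):
    """Approximate a root of f in [a, b]; f(a) and f(b) must differ in sign."""
    fa = f(a)
    if fa * f(b) > 0:
        raise ValueError("f(a) and f(b) must have opposite signs")
    for _ in range(max_iter):
        p = a + (b - a) / 2            # midpoint of the current interval
        fp = f(p)
        # Stop on an exact root or once the half-width is below tol.
        if fp == 0 or (b - a) / 2 < tol:
            return p
        if fa * fp > 0:                # same sign as f(a): root lies in (p, b)
            a, fa = p, fp
        else:                          # opposite sign: root lies in (a, p)
            b = p
    return p


# Hypothetical test problem: x^3 + 4x^2 - 10 changes sign on [1, 2].
bisection_root = bisection(lambda x: x**3 + 4 * x**2 - 10, 1.0, 2.0)
print(bisection_root)
```

Computing the midpoint as `a + (b - a) / 2` mirrors the first form of the formula above and keeps the midpoint inside the interval even when `a` and `b` are very close.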

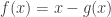

A number $p$ is a fixed point for a given function $g$ if $g(p) = p$. In this section we consider the problem of finding solutions to fixed-point problems and the connection between the fixed-point problems and the root-finding problems we wish to solve. Root-finding problems and fixed-point problems are equivalent classes in the following sense:

Given a root-finding problem $f(p) = 0$, we can define functions $g$ with a fixed point at $p$ in a number of ways, for example, as $g(x) = x - f(x)$ or as $g(x) = x + 3f(x)$. Conversely, if the function $g$ has a fixed point at $p$, then the function defined by $f(x) = x - g(x)$ has a zero at $p$.

Although the problems we wish to solve are in the root-finding form, the fixed-point form is easier to analyze, and certain fixed-point choices lead to very powerful root-finding techniques.
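As a small illustration of the equivalence, the sketch below turns a root-finding problem into a fixed-point problem with $g(x) = x - f(x)$ and then applies the standard iteration $p_n = g(p_{n-1})$. The iteration itself, the helper name `fixed_point`, the stopping rule, and the test function $f(x) = x - \cos x$ are assumptions made for this example; the iteration only converges for suitable choices of $g$ and starting point.

```python
import math


def fixed_point(g, p0, tol=1e-10, max_iter=200):
    """Iterate p_n = g(p_{n-1}) until successive iterates differ by less than tol."""
    p = p0
    for _ in range(max_iter):
        p_next = g(p)
        if abs(p_next - p) < tol:
            return p_next
        p = p_next
    raise RuntimeError("fixed-point iteration did not converge")


# Hypothetical root-finding problem f(p) = 0 with f(x) = x - cos(x).
def f_fp(x):
    return x - math.cos(x)


# Fixed-point form g(x) = x - f(x), which here reduces to cos(x).
def g_fp(x):
    return x - f_fp(x)


fp_point = fixed_point(g_fp, 1.0)
print(fp_point, f_fp(fp_point))   # f is (nearly) zero at the fixed point of g
```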

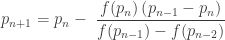

Newton’s (or the Newton-Raphson) method is one of the most powerful and well-known numerical methods for solving a root-finding problem. With an initial approximation $p_0$, Newton’s method generates the sequence $\{p_n\}_{n=0}^{\infty}$ by

$$p_n = p_{n-1} - \frac{f(p_{n-1})}{f'(p_{n-1})}, \quad \text{for } n \geq 1.$$
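A minimal Python sketch of this iteration follows. The name `newton`, the stopping test on successive iterates, and the example $f(x) = \cos x - x$ with $p_0 = \pi/4$ are assumptions made for illustration; the derivative is supplied by hand as a second function.

```python
import math


def newton(f, df, p0, tol=1e-12, max_iter=50):
    """Newton iteration p_n = p_{n-1} - f(p_{n-1}) / f'(p_{n-1})."""
    p = p0
    for _ in range(max_iter):
        dfp = df(p)
        if dfp == 0:
            raise ZeroDivisionError("derivative vanished at the current iterate")
        p_next = p - f(p) / dfp
        if abs(p_next - p) < tol:
            return p_next
        p = p_next
    raise RuntimeError("Newton's method did not converge")


# Hypothetical example: f(x) = cos(x) - x, with f'(x) = -sin(x) - 1.
newton_root = newton(lambda x: math.cos(x) - x,
                     lambda x: -math.sin(x) - 1,
                     math.pi / 4)
print(newton_root)
```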

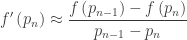

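To circumvent the problem of the derivative evaluation in Newton’s method, we introduce a slight variation. By definition,

$$f'(p_{n-1}) = \lim_{x \to p_{n-1}} \frac{f(x) - f(p_{n-1})}{x - p_{n-1}}.$$

Letting $x = p_{n-2}$, we have

$$f'(p_{n-1}) \approx \frac{f(p_{n-2}) - f(p_{n-1})}{p_{n-2} - p_{n-1}} = \frac{f(p_{n-1}) - f(p_{n-2})}{p_{n-1} - p_{n-2}},$$

which, substituted into Newton’s formula, yields the Secant method:

$$p_n = p_{n-1} - \frac{f(p_{n-1})\,(p_{n-1} - p_{n-2})}{f(p_{n-1}) - f(p_{n-2})}.$$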
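The resulting two-point iteration can be sketched as follows. The name `secant`, the stopping rule, and the example $f(x) = \cos x - x$ with starting values $0.5$ and $\pi/4$ are assumptions for illustration only.

```python
import math


def secant(f, p0, p1, tol=1e-12, max_iter=50):
    """Secant iteration: Newton's formula with f' replaced by a difference quotient."""
    f0, f1 = f(p0), f(p1)
    for _ in range(max_iter):
        if f1 == f0:
            raise ZeroDivisionError("secant line is horizontal")
        p2 = p1 - f1 * (p1 - p0) / (f1 - f0)
        if abs(p2 - p1) < tol:
            return p2
        # Shift the pair: keep the two most recent approximations.
        p0, f0 = p1, f1
        p1, f1 = p2, f(p2)
    raise RuntimeError("Secant method did not converge")


# Hypothetical example: f(x) = cos(x) - x, started from 0.5 and pi/4.
secant_root = secant(lambda x: math.cos(x) - x, 0.5, math.pi / 4)
print(secant_root)
```

Storing `f0` and `f1` between steps means each iteration costs one new function evaluation and no derivative evaluation.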

- The method of False Position

The method of False Position (also called Regula Falsi) generates approximations in the same manner as the Secant method, but it includes a test to ensure that the root is bracketed between successive iterations. Although it is not a method we generally recommend, it illustrates how bracketing can be incorporated.

First choose initial approximations $p_0$ and $p_1$ with $f(p_0) \cdot f(p_1) < 0$. The approximation $p_2$ is chosen in the same manner as in the Secant method, as the $x$-intercept of the line joining $(p_0, f(p_0))$ and $(p_1, f(p_1))$. To decide which secant line to use to compute $p_3$, we check $f(p_2) \cdot f(p_1)$. If this value is negative, then $p_1$ and $p_2$ bracket a root, and we choose $p_3$ as the $x$-intercept of the line joining $(p_1, f(p_1))$ and $(p_2, f(p_2))$. If not, we choose $p_3$ as the $x$-intercept of the line joining $(p_0, f(p_0))$ and $(p_2, f(p_2))$, and then interchange the indices on $p_0$ and $p_1$.

In a similar manner, once $p_3$ is found, the sign of $f(p_3) \cdot f(p_2)$ determines whether we use $p_2$ and $p_3$ or $p_3$ and $p_1$ to compute $p_4$. In the latter case a relabeling of $p_2$ and $p_1$ is performed.
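The bracketing test can be sketched in Python as below. Rather than literally swapping indices, this sketch always stores the newest approximation in `p1` and replaces `p0` only when the newest pair brackets the root, which has the same effect as the relabeling described above. The name `false_position`, the stopping rule, and the example $f(x) = \cos x - x$ on $[0.5, \pi/4]$ are assumptions for illustration.

```python
import math


def false_position(f, p0, p1, tol=1e-10, max_iter=200):
    """Secant-style steps that always keep the root bracketed by p0 and p1."""
    q0, q1 = f(p0), f(p1)
    if q0 * q1 >= 0:
        raise ValueError("f(p0) and f(p1) must have opposite signs")
    for _ in range(max_iter):
        # x-intercept of the line through (p0, q0) and (p1, q1)
        p = p1 - q1 * (p1 - p0) / (q1 - q0)
        if abs(p - p1) < tol:
            return p
        q = f(p)
        if q * q1 < 0:
            # p1 and p bracket the root, so the old p0 is discarded.
            p0, q0 = p1, q1
        # Otherwise p0 and p bracket the root and p0 is kept.
        p1, q1 = p, q
    raise RuntimeError("method of False Position did not converge")


# Hypothetical example: f(x) = cos(x) - x changes sign on [0.5, pi/4].
fpos_root = false_position(lambda x: math.cos(x) - x, 0.5, math.pi / 4)
print(fpos_root)
```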

Source: Richard L. Burden and J. Douglas Faires, Numerical Analysis, 8th edition, Thomson/Brooks/Cole, 2005.

